Container-Native Architecture

Container-native?

Containers are first-class citizens.

Each container is an equal peer on the network.

Remember: your mission is not "manage VMs."

Your mission is what your application does for your organization.

Infrastructure (undifferentiated heavy lifting) is incidental cost and incidental complexity.

Application containers make the full promise of cloud computing possible...

but require new ways of working.

Let me tell you a story...

Triton Elastic Container Service

- Run Linux containers securely on bare-metal in public cloud

- Or run on-premises (it's open source!)

Director of DevOps

... in production since Oct 2013

Our problem at DF:

"Works on my machine"

Slow, non-atomic deploys

Dependency isolation

Version 1: AMIs

Bake a server image for each application for each deploy.

Deploy by standing up entirely new stack.

The Good:

Immutable infrastructure!

Blue/green deploys!

DevOps kool-aid for everyone!

The Bad and/or Ugly:

Deployments are soooo slow!

One VM per service instance -> poor utilization

Add more microservices? Add more AMIs, VMs, and ELBs!

OMGWTFBBQFML... deployments are soooo slow!

Version 2: Docker

Human-and-machine-readable build documentation.

No more "works on my machine."

Fix dependency isolation.

Interface-based approach to application deployment.

Deployments are fast! Yay!

Ok, what's wrong?

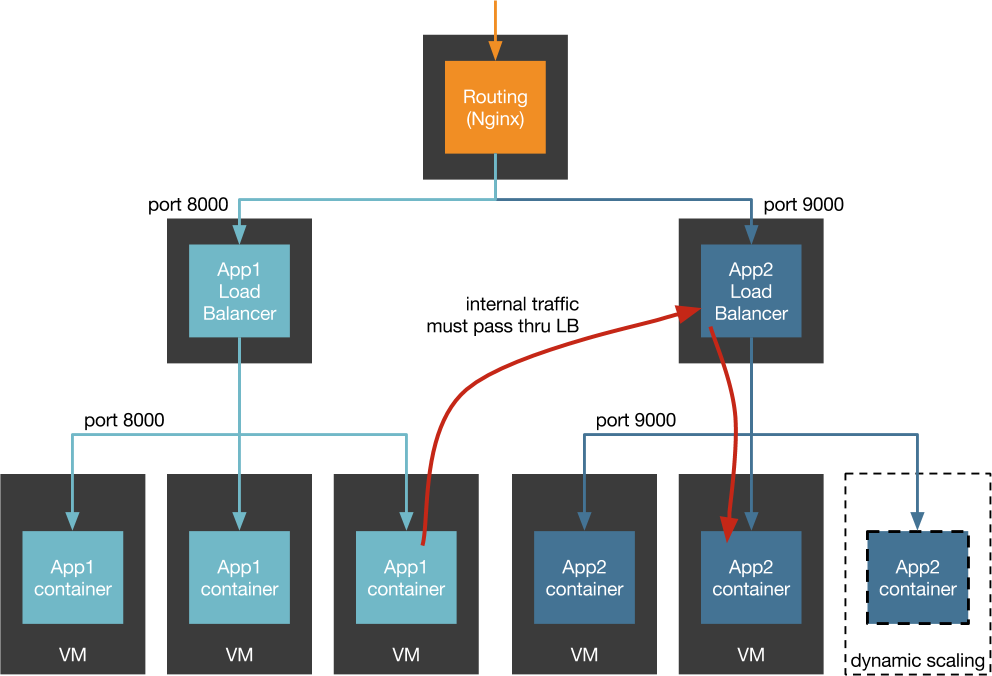

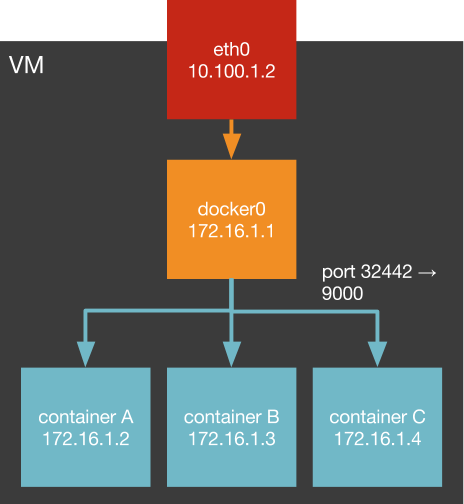

NAT


Docker's use of bridging and NAT noticeably increases the transmit path length; vhost-net is fairly efficient at transmitting but has high overhead on the receive side... In real network-intensive workloads, we expect such CPU overhead to reduce overall performance.

IBM Research Report: An Updated Performance Comparison of Virtual Machines and Linux Containers

Can we avoid NAT?

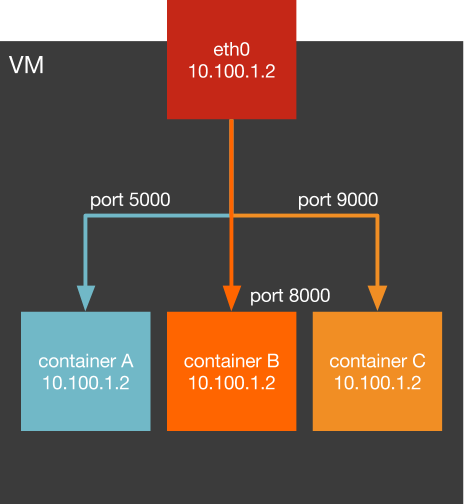

Host networking (--net=host)

- breaks multi-tenant security

- port conflicts

- port mapping at LB

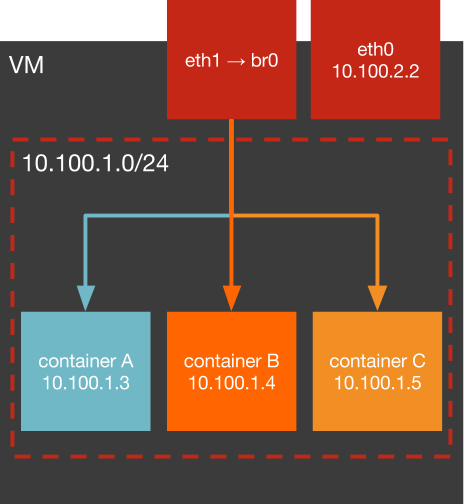

Can we avoid NAT?

Bridge (not --bridge) networking

- Can get IP per container

- May need 2nd NIC

- Scaling w/ subnet per host

Single Point of Failure

DNS

Simple discovery! But...

Can't address individual hosts behind a record.

No health checking.

TTL caching.

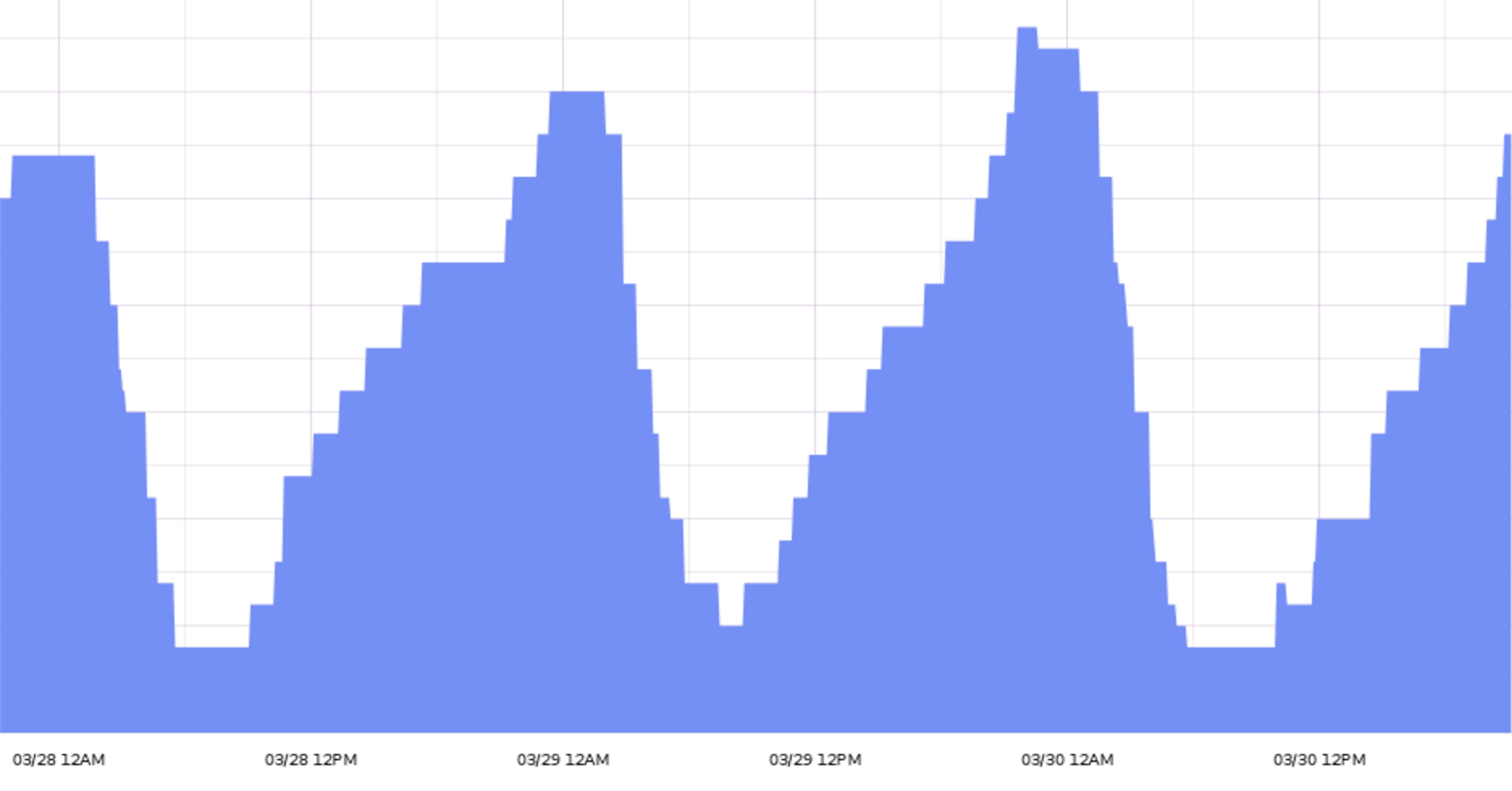

Health checks

Naive health checking == cascading failures

Health checks

Resource usage climbs...

Autoscaling triggers...

We wait for VMs to provision...

App instance blocks...

Load balancer removes app instance...

Resource usage per remaining instance climbs...

Still waiting for VMs to provision...

More app instances block...

Load balancer removes more app instances...

New VMs come online with new app instances!

New app instances are immediately over-capacity!

New app instances block...

Sadness as a service.
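
The spiral above is plain arithmetic. The sketch below is a toy model with made-up numbers (500 req/s of total load, instances that block above 120 req/s each; neither figure comes from the talk). Whenever per-instance load exceeds capacity, the load balancer drops one more instance and the survivors absorb its share.

```bash
#!/bin/bash
# Toy model of the naive-health-check cascade. All numbers are hypothetical.
# cascade LOAD CAPACITY INSTANCES prints how many instances remain in the
# load balancer once the cascade settles; the step-by-step trace goes to stderr.
cascade() {
    local load=$1 capacity=$2 instances=$3
    # load > capacity * instances  <=>  per-instance load exceeds capacity
    while (( instances > 0 && load > capacity * instances )); do
        echo "instances=$instances per-instance=$(( load / instances )) req/s > $capacity: LB removes one" >&2
        instances=$(( instances - 1 ))
    done
    echo "$instances"
}

cascade 500 120 4   # the existing fleet collapses: prints 0
cascade 500 120 2   # two fresh VMs arriving into the same load: prints 0
```

In this model, adding capacity only helps if enough of it lands at once to pull per-instance load under the threshold (`cascade 500 120 5` settles at 5); trickling new instances into an overloaded pool just feeds them to the cascade.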

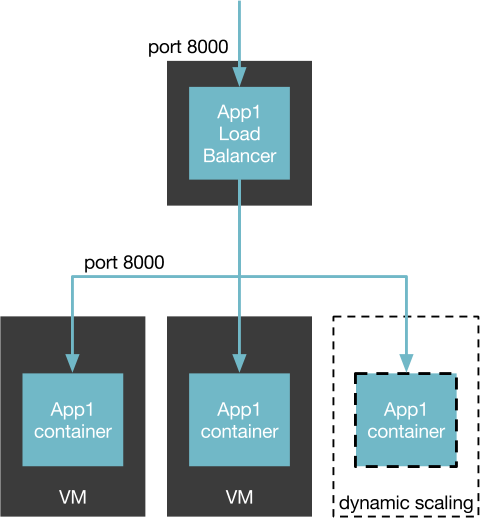

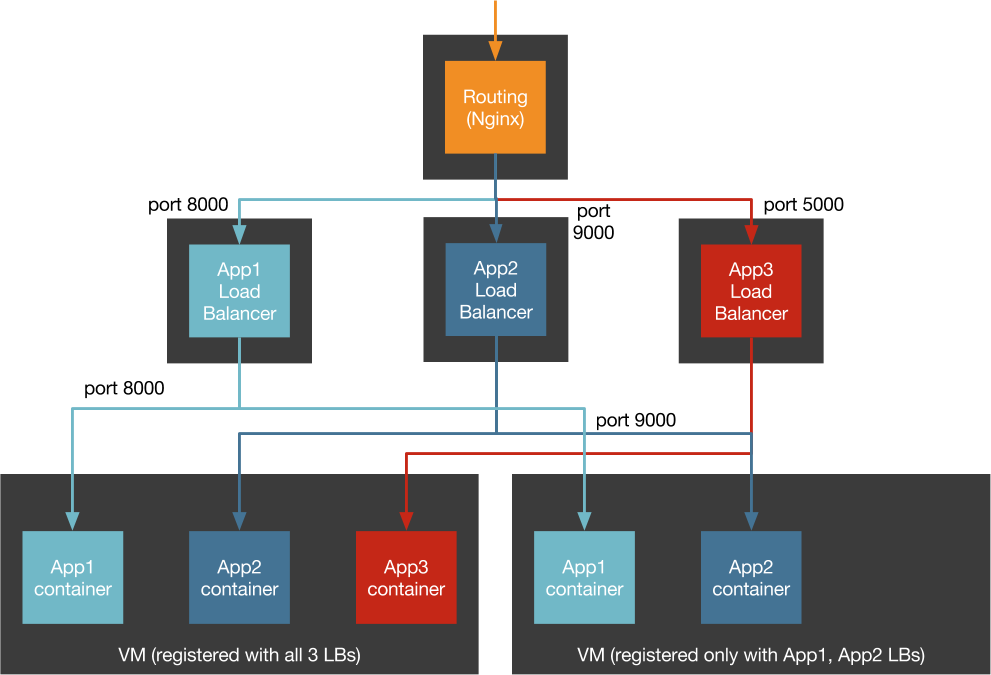

Layers

Separate lifecycle for infrastructure and applications.

Infrastructure is undifferentiated heavy lifting!

Utilization

One container per VM!

More complexity!

Separate lifecycle for VMs and app containers

Containers don't have their own IP

Pass-through proxy for all outbound requests

All packets go through NAT or port forwarding

We can do better!

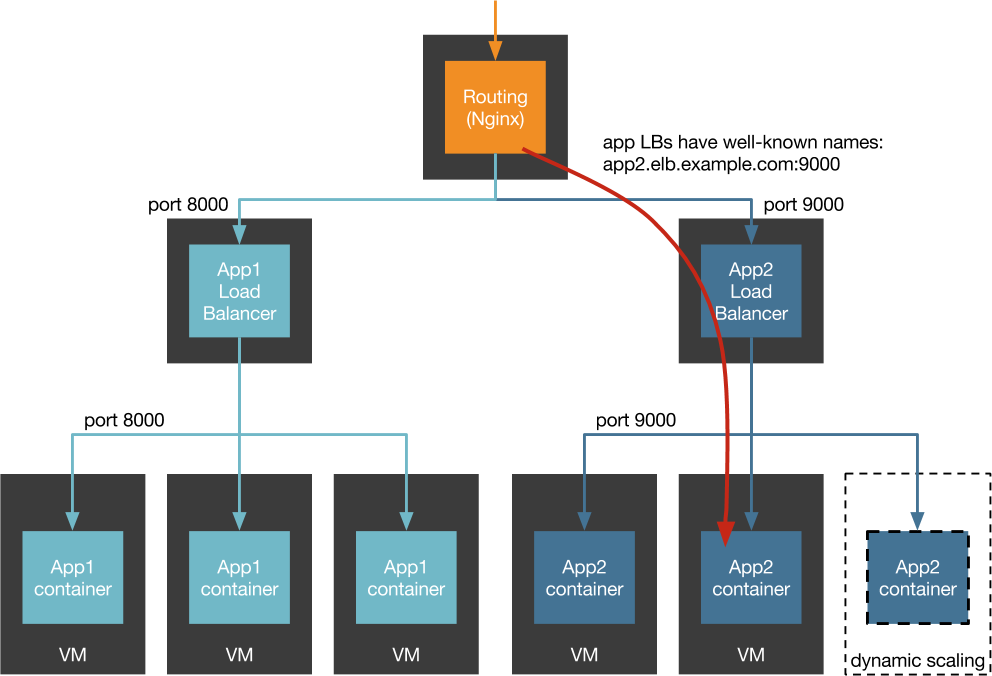

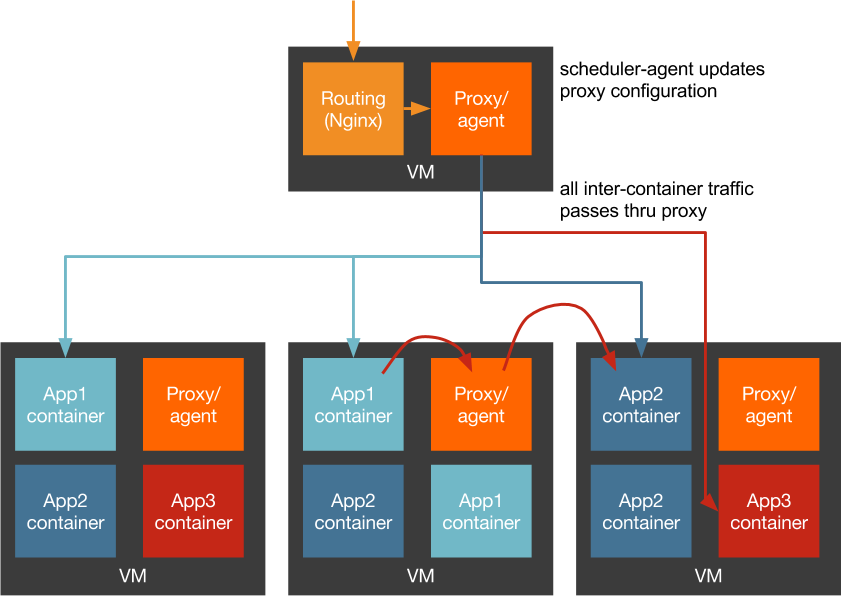

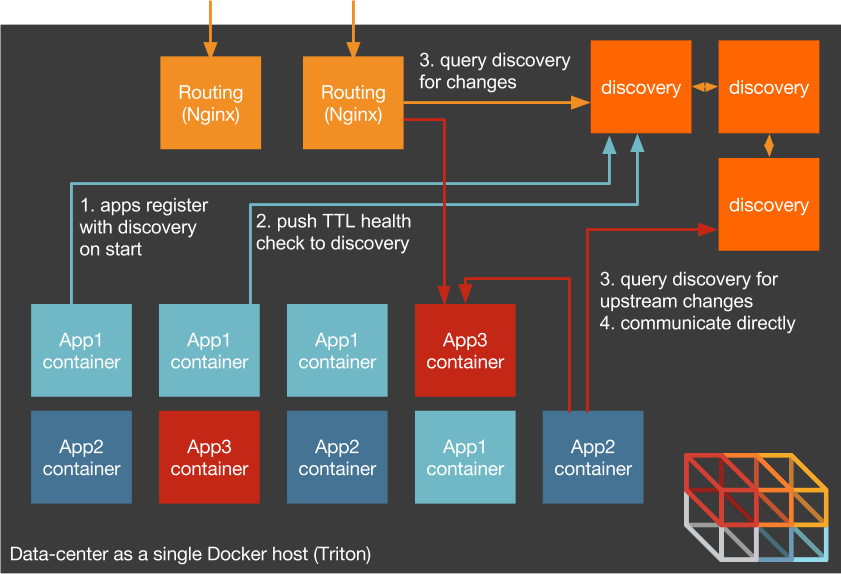

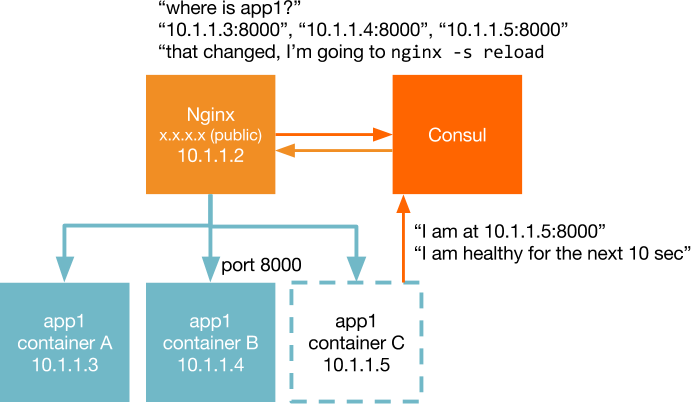

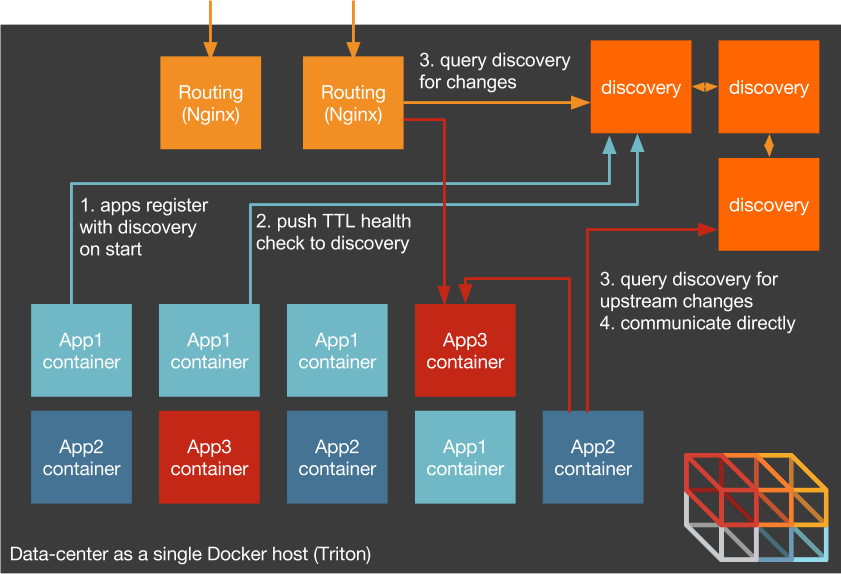

The Container-Native Alternative?

Remove the middleman!

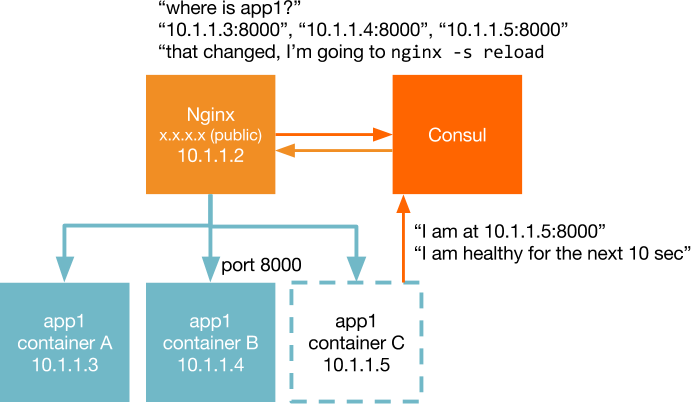

Applications ask for discovery of upstream services.

Applications tell the discovery service where to find them.

Applications report if they are healthy.

The Container-Native Alternative

Push responsibility of the application topology away from the network infrastructure and into the application itself where it belongs.

Responsibilities of a Container

Registration

Self-introspection

Heartbeats

Look for change

Respond to change

No sidecars

- Sidecar needs to reach into application container

- Unsuited for multi-tenant security

- Deployment of sidecar bound to deployment of app

Application-Aware Health Checks

No packaging tooling into another service

App container lifecycle separate from discovery service

Respond quickly to changes

Demo

Legacy Pre-Container Apps

- Registration: wrap start of app in a shell script

- Self-introspection: self-test?

- Heartbeats: um...

- Look for change: ???

- Respond to change: profit?

    #!/bin/bash
    # startup script for our awesomeapp

    # register with consul
    curl --fail -s -X PUT -d @myservice.json \
        http://consul.example.com:8500/v1/agent/service/register

    # start health check daemon and background it
    /opt/check-health-of-awesomeapp.sh &

    # run app
    /opt/awesomeapp serve --bind 0.0.0.0 -p 8080
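
The startup script backgrounds `/opt/check-health-of-awesomeapp.sh` without showing it. One hypothetical version is sketched below. It assumes `myservice.json` registered the service with ID `awesomeapp` plus a TTL check (Consul names a check embedded in a service registration `service:<service-id>`) and that the app answers on a `/health` endpoint at port 8080; both are assumptions. It refreshes the TTL through Consul's `/v1/agent/check/pass/<check_id>` endpoint only while the app responds, so a hung app simply lets the TTL lapse.

```bash
#!/bin/bash
# Hypothetical sketch of /opt/check-health-of-awesomeapp.sh (not shown in the talk).
# Assumes: service ID "awesomeapp" registered with a TTL check, which Consul
# names "service:awesomeapp", and an app health endpoint at :8080/health.
CONSUL=${CONSUL:-http://consul.example.com:8500}
CHECK_ID=${CHECK_ID:-service:awesomeapp}
INTERVAL=${INTERVAL:-10}

# self-introspection: does the app answer its (assumed) health endpoint?
app_is_healthy() {
    curl --fail -s -o /dev/null http://localhost:8080/health
}

# one heartbeat: refresh the TTL only while the app is healthy; otherwise send
# nothing and let the TTL expire so Consul marks the instance critical
heartbeat_once() {
    app_is_healthy || return 1
    curl --fail -s -X PUT "${CONSUL}/v1/agent/check/pass/${CHECK_ID}" > /dev/null
}

heartbeat_loop() {
    while :; do
        heartbeat_once || echo "awesomeapp unhealthy; not refreshing TTL" >&2
        sleep "$INTERVAL"
    done
}

# as a standalone script, the file would end with a call to heartbeat_loop
```

Even with this daemon, the legacy approach still has two unsupervised processes in one container: if the app dies, nothing stops the heartbeat script, and if the heartbeat script dies, the app keeps serving while Consul marks it critical.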

Containerbuddy to the rescue!

https://github.com/joyent/containerbuddy

Containerbuddy:

A shim to help make existing apps container-native

- Registration: registers to Consul on startup

- Self-introspection: execute external health check

- Heartbeats: send TTL health check to Consul

- Look for change: poll Consul for changes

- Respond to change: execute external response behavior
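
Containerbuddy itself is written in Go; what follows is only a shell sketch of the same poll-and-compare idea, not its implementation. It assumes `jq` is installed (it is not in the demo images) and that each registered service has an address set. Each poll reduces Consul's list of passing instances (`/v1/health/service/<name>?passing`) to sorted `address:port` lines, fingerprints them with `cksum`, and runs the change handler only when the fingerprint differs from the previous poll.

```bash
#!/bin/bash
# Shell sketch of "look for change / respond to change" (not Containerbuddy's code).
CONSUL=${CONSUL:-http://consul:8500}
LAST_FINGERPRINT=""

# print the passing instances of service $1 as "address:port" lines (assumes jq)
fetch_instances() {
    curl --fail -s "${CONSUL}/v1/health/service/$1?passing" \
        | jq -r '.[] | "\(.Service.Address):\(.Service.Port)"'
}

# succeed (and remember the new state) only if the instance set differs from
# the previous call; sorting makes the comparison order-insensitive
check_for_change() {
    local fingerprint
    fingerprint=$(printf '%s\n' "$1" | sort | cksum)
    if [[ "$fingerprint" != "$LAST_FINGERPRINT" ]]; then
        LAST_FINGERPRINT=$fingerprint
        return 0
    fi
    return 1
}

# poll_backend NAME INTERVAL HANDLER, e.g.
#   poll_backend app 7 /opt/containerbuddy/reload-nginx.sh
poll_backend() {
    local name=$1 interval=$2 handler=$3 instances
    while :; do
        # with pipefail, a failed curl skips this round instead of looking
        # like "no instances"
        if instances=$(set -o pipefail; fetch_instances "$name") &&
            check_for_change "$instances"; then
            "$handler"
        fi
        sleep "$interval"
    done
}
```

Because `LAST_FINGERPRINT` starts empty, the first successful poll always counts as a change, which conveniently renders the initial configuration on startup.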

No Supervision

Containerbuddy is PID 1

Returns exit code of shimmed process back to Docker Engine (or Triton) and dies

Attaches stdout/stderr from app to stdout/stderr of container
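
The "no supervision" contract is small enough to sketch in shell: start the app as a child, forward termination signals to it, and return whatever exit code it returns. This is a hypothetical illustration of the behavior listed above, not Containerbuddy's code, and it leaves out registration and polling. Forwarding matters because the kernel applies no default signal actions to PID 1, so a PID 1 without a handler ignores the SIGTERM sent by `docker stop`.

```bash
#!/bin/bash
# Hypothetical sketch of a minimal PID 1 shim (not Containerbuddy's Go code).
# run_shim CMD [ARGS...] runs CMD as a child and returns CMD's exit code.
run_shim() {
    local child status forwarded=0
    # forward termination signals (e.g. SIGTERM from `docker stop`) to the app
    trap 'forwarded=1; kill -TERM "$child" 2>/dev/null' TERM INT
    # the child inherits our stdout/stderr, so app output becomes container output
    "$@" &
    child=$!
    while :; do
        forwarded=0
        wait "$child"
        status=$?
        # a trapped signal makes `wait` return early while the app is still
        # shutting down; wait again to collect the app's real exit code
        (( forwarded )) || break
    done
    trap - TERM INT
    return "$status"
}

# as a container entrypoint: run_shim "$@"; exit $?
```

Returning the child's status rather than restarting it leaves restart policy to the scheduler (`restart: always` in the Compose file below), which is the point of "no supervision".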

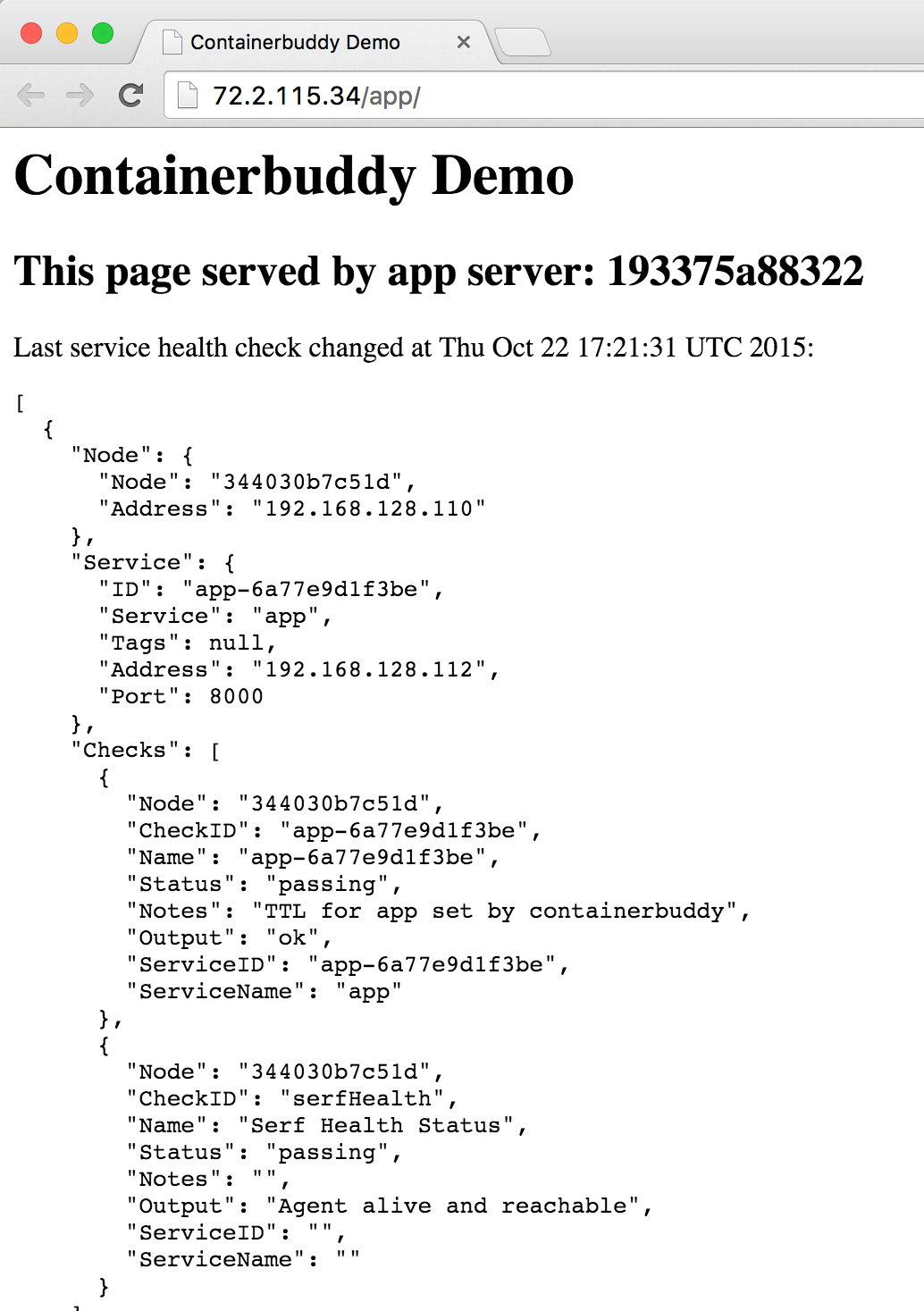

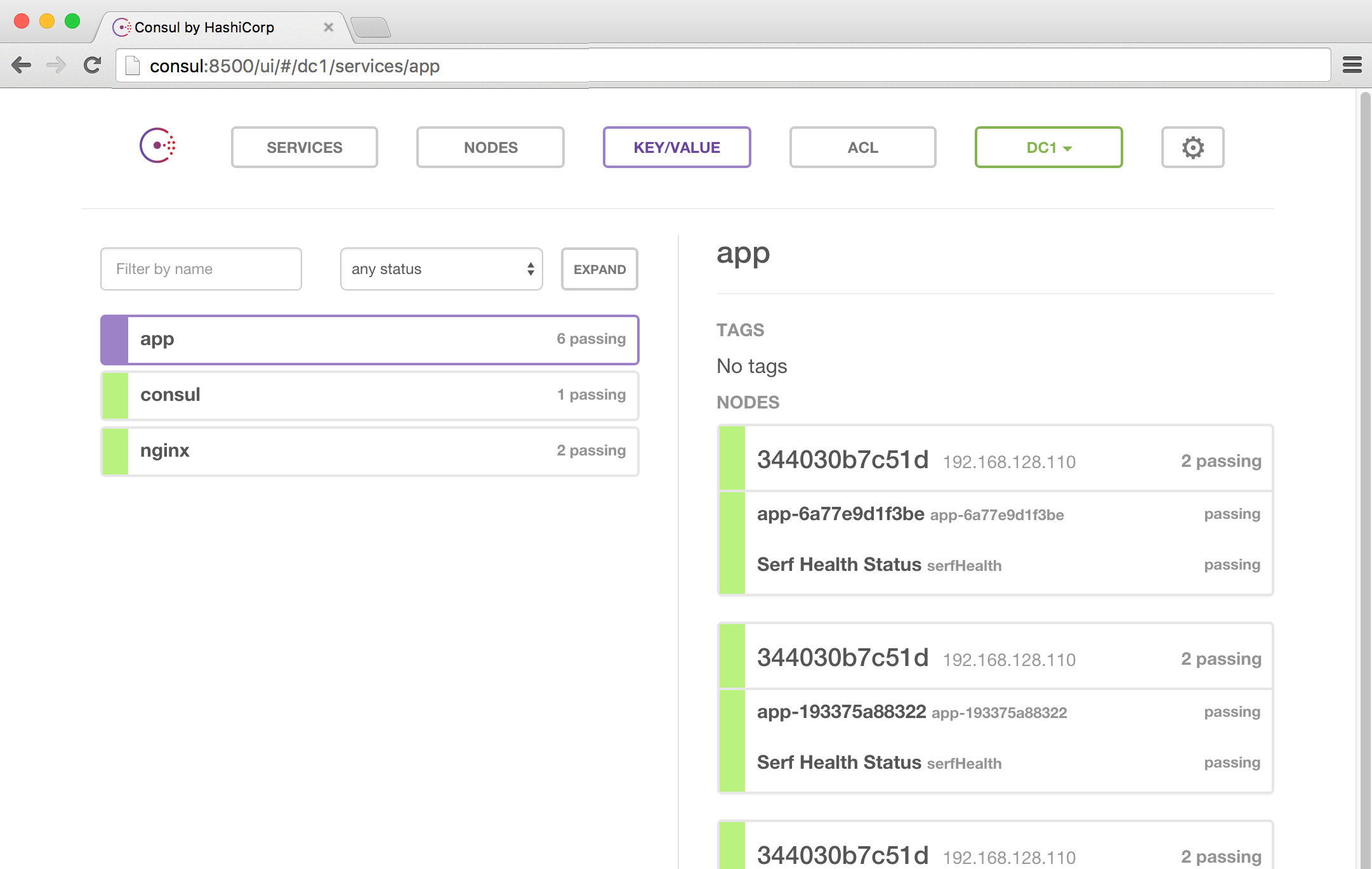

Demo App

    $ cat ./app/Dockerfile
    # a minimal Node.js container including containerbuddy
    FROM node:slim

    # install curl
    RUN apt-get update && \
        apt-get install -y \
        curl && \
        rm -rf /var/lib/apt/lists/*

    # install a simple http server
    RUN npm install -g json http-server

    # add containerbuddy and all our configuration
    ADD opt/containerbuddy /opt/containerbuddy/

    $ cat ./nginx/Dockerfile
    # a minimal Nginx container including containerbuddy and a
    # simple virtualhost config
    FROM nginx:latest

    # install curl and unzip
    RUN apt-get update && apt-get install -y \
        curl \
        unzip && \
        rm -rf /var/lib/apt/lists/*

    # install consul-template
    RUN curl -Lo /tmp/consul_template_0.11.0_linux_amd64.zip \
        https://github.com/hashicorp/consul-template/releases/download/v0.11.0/consul_template_0.11.0_linux_amd64.zip && \
        unzip -d /bin /tmp/consul_template_0.11.0_linux_amd64.zip

    # add containerbuddy and all our configuration
    ADD opt/containerbuddy /opt/containerbuddy/
    ADD etc/nginx/conf.d /etc/nginx/conf.d/

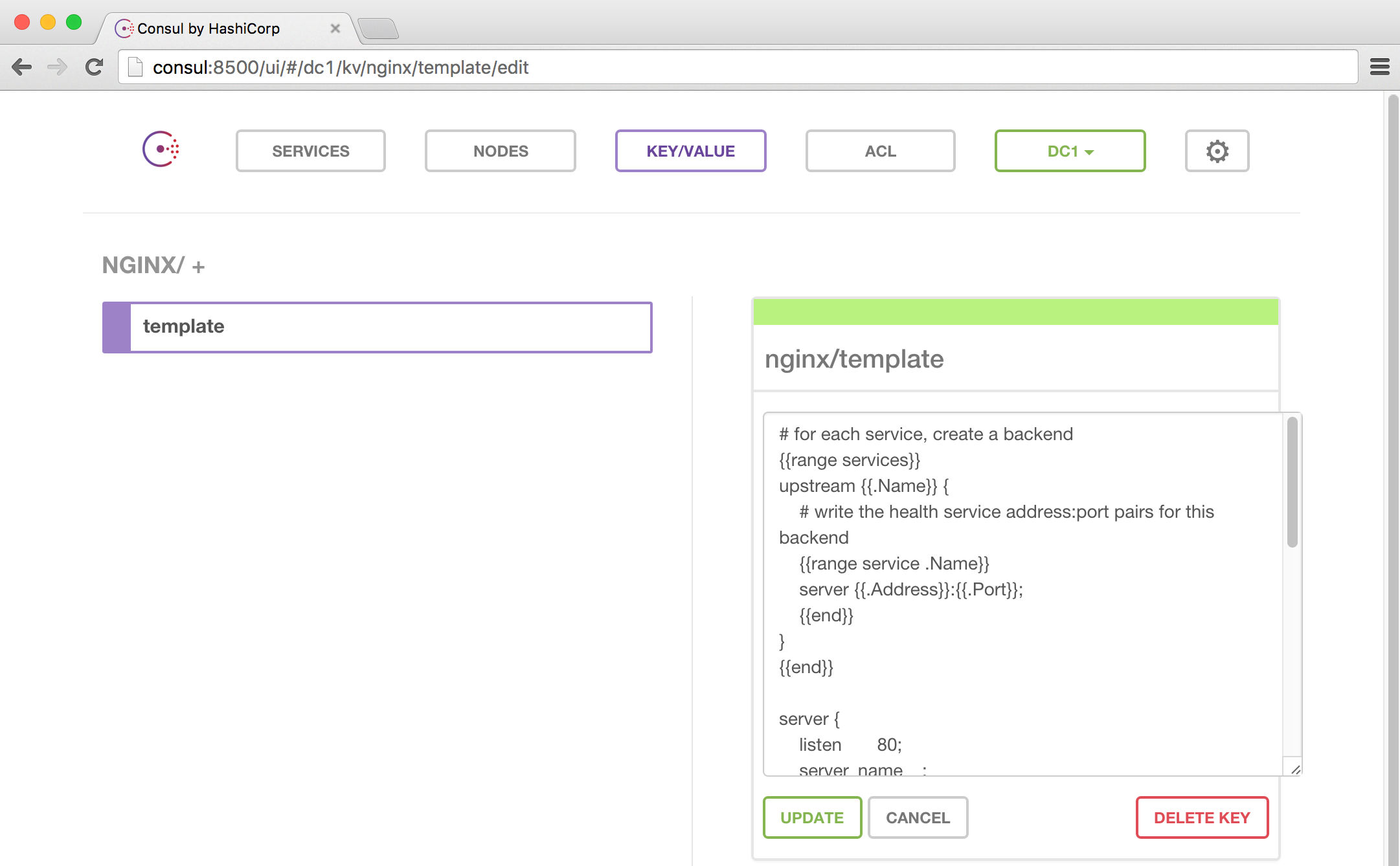

    $ less ./nginx/default.ctmpl
    # for each service, create a backend
    {{range services}}
    upstream {{.Name}} {
        # write the healthy service address:port pairs for this backend
        {{range service .Name}}
        server {{.Address}}:{{.Port}};
        {{end}}
    }
    {{end}}

    server {
        listen 80;
        server_name _;

        # need ngx_http_stub_status_module compiled-in
        location /health {
            stub_status on;
            allow 127.0.0.1;
            deny all;
        }

        {{range services}}
        location /{{.Name}}/ {
            proxy_pass http://{{.Name}}/;
            proxy_redirect off;
        }
        {{end}}
    }

    $ cat ./nginx/opt/containerbuddy/reload-nginx.sh
    #!/bin/bash
    # fetch latest virtualhost template from Consul k/v
    curl -s --fail "consul:8500/v1/kv/nginx/template?raw" \
        > /tmp/virtualhost.ctmpl

    # render virtualhost template using values from Consul and reload Nginx
    consul-template \
        -once \
        -consul consul:8500 \
        -template \
        "/tmp/virtualhost.ctmpl:/etc/nginx/conf.d/default.conf:nginx -s reload"

    nginx:
      image: 0x74696d/containerbuddy-demo-nginx
      mem_limit: 512m
      ports:
        - 80
      links:
        - consul:consul
      restart: always
      environment:
        - CONTAINERBUDDY=file:///opt/containerbuddy/nginx.json
      command: >
        /opt/containerbuddy/containerbuddy
        nginx -g "daemon off;"

    {
      "consul": "consul:8500",
      "services": [
        {
          "name": "nginx",
          "port": 80,
          "health": "/usr/bin/curl --fail -s http://localhost/health",
          "poll": 10,
          "ttl": 25
        }
      ],
      "backends": [
        {
          "name": "app",
          "poll": 7,
          "onChange": "/opt/containerbuddy/reload-nginx.sh"
        }
      ]
    }
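
The `poll` and `ttl` values work together. Assuming Containerbuddy runs the `health` command every `poll` seconds and refreshes the Consul TTL each time it passes (our reading of the config, not something the slide spells out), the TTL must outlast at least one polling interval. The hypothetical helper below makes the margin explicit: with `poll: 10` and `ttl: 25`, an instance whose last good heartbeat was at t=0 can miss the heartbeats at t=10 and t=20, and Consul marks it critical at t=25.

```bash
#!/bin/bash
# tolerated_misses TTL POLL: consecutive missed heartbeats an instance survives,
# i.e. the number of heartbeat times k*POLL (k >= 1) strictly before the TTL ends.
tolerated_misses() {
    local ttl=$1 poll=$2
    if (( ttl <= poll )); then
        # even a healthy instance can expire before its next heartbeat lands
        echo "warning: ttl ($ttl) should be greater than poll ($poll)" >&2
    fi
    # integer ceil(ttl / poll) - 1
    echo $(( (ttl + poll - 1) / poll - 1 ))
}

tolerated_misses 25 10   # values from the config above: prints 2
```

A larger `ttl` rides out slow health checks but delays removal of a dead instance; a `ttl` at or below `poll` makes healthy instances flap.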

Let's run it!

    $ env | grep DOCKER
    DOCKER_TLS_VERIFY=1
    DOCKER_CLIENT_TIMEOUT=300
    DOCKER_CERT_PATH=/Users/tim.gross/.sdc/docker/timgross
    DOCKER_HOST=tcp://us-east-1.docker.joyent.com:2376

    $ ./start.sh -p example
    Starting example application
    Pulling latest container versions
    Pulling consul (progrium/consul:latest)...
    latest: Pulling from progrium/consul (req 619f5eb0-78e0-11e5-a6a0-e97a8f70ea66)
    ...
    Digest: sha256:8cc8023462905929df9a79ff67ee435a36848ce7a10f18d6d0faba9306b97274
    Status: Image is up to date for progrium/consul:latest
    Pulling nginx (0x74696d/containerbuddy-demo-nginx:latest)...
    latest: Pulling from 0x74696d/containerbuddy-demo-nginx (req 63a60420-78e0-11e5-a6a0-e97a8f70ea66)
    ...
    Digest: sha256:970063dc897f5195e7ce81a0bb2b99e58ac26468e0784792bd338dabf9b9e18f
    Status: Image is up to date for 0x74696d/containerbuddy-demo-nginx:latest
    Pulling app (0x74696d/containerbuddy-demo-app:latest)...
    latest: Pulling from 0x74696d/containerbuddy-demo-app (req 658a7b90-78e0-11e5-a6a0-e97a8f70ea66)
    ...
    Digest: sha256:f8ef4988fea7da5d518e777e2a269e1feb5282fa335376290066a5c57a543127
    Status: Image is up to date for 0x74696d/containerbuddy-demo-app:latest
    Starting Consul.
    Creating example_consul_1...
    Writing template values to Consul at 72.2.119.22
    true
    Opening consul console

    echo 'Starting Consul.'
    docker-compose -p example up -d consul

    # get network info from consul. alternatively we can push this into
    # a DNS A-record to bootstrap the cluster
    CONSUL_IP=$(docker inspect example_consul_1 \
        | json -a NetworkSettings.IPAddress)

    echo "Writing template values to Consul at ${CONSUL_IP}"
    curl --fail -s -X PUT --data-binary @./nginx/default.ctmpl \
        http://${CONSUL_IP}:8500/v1/kv/nginx/template

    echo 'Opening consul console'
    open http://${CONSUL_IP}:8500/ui

    Starting application servers and Nginx
    example_consul_1 is up-to-date
    Creating example_nginx_1...
    Creating example_app_1...
    Waiting for Nginx at 72.2.115.34:80 to pick up initial configuration.
    ...................
    Opening web page... the page will reload every 5 seconds with any updates.
    Try scaling up the app!
    docker-compose -p example scale app=3

    echo 'Starting application servers and Nginx'
    docker-compose -p example up -d

    # get network info from Nginx and poll it for liveness
    NGINX_IP=$(docker inspect example_nginx_1 \
        | json -a NetworkSettings.IPAddress)
    echo "Waiting for Nginx at ${NGINX_IP} to pick up initial configuration."
    while :
    do
        sleep 1
        curl -s --fail -o /dev/null "http://${NGINX_IP}/app/" && break
        echo -ne .
    done
    echo
    echo 'Opening web page... the page will reload every 5 seconds'
    echo 'with any updates.'
    open http://${NGINX_IP}/app/
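
The wait loop above spins forever if Nginx never picks up its configuration. A bounded variant is sketched below; `wait_for` and its attempt count are our own additions, not part of the demo's `start.sh`.

```bash
#!/bin/bash
# wait_for ATTEMPTS CMD [ARGS...]: run CMD up to ATTEMPTS times, one second
# apart, printing a dot per failed attempt; succeed as soon as CMD succeeds.
wait_for() {
    local attempts=$1 i
    shift
    for (( i = 1; i <= attempts; i++ )); do
        "$@" && { echo >&2; return 0; }
        printf . >&2
        # no point sleeping after the final attempt
        (( i < attempts )) && sleep 1
    done
    echo >&2
    return 1
}

# e.g. in place of the `while :` loop in start.sh:
#   wait_for 120 curl -s --fail -o /dev/null "http://${NGINX_IP}/app/" \
#       || { echo "Nginx never picked up its configuration" >&2; exit 1; }
```

Failing loudly after a fixed budget turns a silently hung demo into an error the operator can act on.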

Does it ~~blend~~ scale?

    $ docker-compose -p example scale app=3
    Creating and starting 2... done
    Creating and starting 3... done

Demo App

| The Old Way | The Container-Native Way |
|---|---|
| Extra network hop from LB or local proxy | Direct container-to-container communication |
| NAT | Containers have their own IP |
| DNS TTL | Topology changes propagate immediately |
| Health checks in the LB | Applications report their own health |
| Two build & orchestration pipelines | Focus on your app alone |
| VMs | Secure multi-tenant bare-metal |

http://0x74696d.com/talk-containerdays-nyc-2015/#/
